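We build the dataset so that it can be fed to the model as input as-is.

The work done in "Reading the image-list CSV file" and "Shaping (transforming) the images" was one-off: nothing from it remains in the form of a dataset.

The dataset is built as follows: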

1. all_image_paths.csv

Suppose the image files

image0.jpg (5x3 pixels)

image1.jpg (3x5 pixels)

image2.jpg (4x3 pixels)

are arranged as follows:

(path)

├ category0

│ └ image0.jpg

└ category1

├ image1.jpg

└ image2.jpg

For these files, create the image-list CSV file all_image_paths.csv.

Each record has the following two fields:

(1) the path of the image file

(2) the label (category)

all_image_paths.csv therefore consists of the following three lines:

(path)/category0/image0.jpg,0[newline (LF)]

(path)/category1/image1.jpg,1[newline (LF)]

(path)/category1/image2.jpg,1[newline (LF)]
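The CSV above can also be generated from the directory layout instead of being written by hand. The following is a minimal standard-library sketch; the function name write_image_list is hypothetical, and it assumes that each subdirectory of the root is one category, that labels are assigned as 0, 1, ... in sorted directory-name order (category0 -> 0, category1 -> 1), and that only .jpg files are listed.

```python
import csv
from pathlib import Path


def write_image_list(root, csv_path):
    """Hypothetical helper: write "path,label" rows for every .jpg under root.

    Assumes the layout root/<category>/<image>.jpg, with the label being the
    index of the category directory in sorted order.
    """
    root = Path(root)
    categories = sorted(p.name for p in root.iterdir() if p.is_dir())
    rows = []
    for label, name in enumerate(categories):
        for image in sorted((root / name).glob("*.jpg")):
            rows.append((str(image), label))
    # newline="" lets the csv module control line endings;
    # lineterminator="\n" writes LF line endings, as in the file above.
    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f, lineterminator="\n")
        writer.writerows(rows)
    return rows
```

For the layout shown above, write_image_list("(path)", "(path)/all_image_paths.csv") would produce the same three lines, assuming the CSV is written outside the category directories.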

We work in the Python interactive shell.

$ source [path]/venv/bin/activate

(venv) $ python

>>> import tensorflow as tf

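>>> tf.enable_eager_execution()  # TF 1.x only; eager execution is the default in TF 2.x, where this top-level function no longer exists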

2. all_image_paths, all_image_labels

>>> csv_name = '(path)/all_image_paths.csv'

>>> csvfile = open(csv_name, 'r')

>>> import csv

>>> reader = csv.reader(csvfile, delimiter=',')

>>> all_image_paths = []

>>> all_image_labels = []

>>> for row in reader:

...     all_image_paths.append(row[0])

...     all_image_labels.append(row[1])

...

>>> csvfile.close()

>>> all_image_paths

['/home/pi/project/ai/ex/csv/category0/image0.jpg',

'/home/pi/project/ai/ex/csv/category1/image1.jpg',

'/home/pi/project/ai/ex/csv/category1/image2.jpg']

>>> all_image_labels

['0', '1', '1']

>>>
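The labels are read as strings ('0', '1'), and they stay strings in the datasets built from them (dtype=string). Models usually expect integer class labels, so converting them while reading can save a step later. Below is a minimal sketch assuming the two-column format above; read_image_list is a hypothetical name, and the with statement closes the file even if an error occurs.

```python
import csv


def read_image_list(csv_name):
    """Hypothetical helper: return (paths, integer labels) from a "path,label" CSV."""
    all_image_paths = []
    all_image_labels = []
    # newline="" is how the csv module documentation recommends opening files.
    with open(csv_name, "r", newline="") as csvfile:
        for row in csv.reader(csvfile, delimiter=","):
            if not row:  # skip blank lines, e.g. a trailing empty line
                continue
            all_image_paths.append(row[0])
            all_image_labels.append(int(row[1]))  # '0' -> 0, '1' -> 1
    return all_image_paths, all_image_labels
```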

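3. Function load_and_preprocess_image

- The images in the data vary in size.

They are resized to the fixed size the model expects.

Here, the three images of 5x3, 3x5, and 4x3 pixels are all resized to 3x3.

- The pixel value range [0, 255] is converted to the range [m, n] the model expects.

Here, it is converted to [0, 1].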

>>> def raw_to_tensor(path):

...     image_raw = tf.io.read_file(path)

...     return tf.image.decode_image(image_raw, channels=3)

...

>>> def load_and_preprocess_image(path):

...     image_tensor = raw_to_tensor(path)

...     image_final = tf.image.resize(image_tensor, [3, 3])

...     image_final /= 255.0

...     return image_final

...
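The division by 255.0 is the case [m, n] = [0, 1] of a linear map from [0, 255] to [m, n]: v' = m + (v / 255) * (n - m). A plain-Python sketch of that formula follows; the name rescale_pixel is hypothetical. The same expression also works element-wise on the float tensor returned by tf.image.resize.

```python
def rescale_pixel(v, m=0.0, n=1.0):
    """Hypothetical helper: map a pixel value v in [0, 255] linearly to [m, n]."""
    return m + (v / 255.0) * (n - m)


# [m, n] = [0, 1] is what load_and_preprocess_image does (v / 255.0).
print(rescale_pixel(255))           # 1.0
# [m, n] = [-1, 1], a range some models expect instead.
print(rescale_pixel(0, -1.0, 1.0))  # -1.0
```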

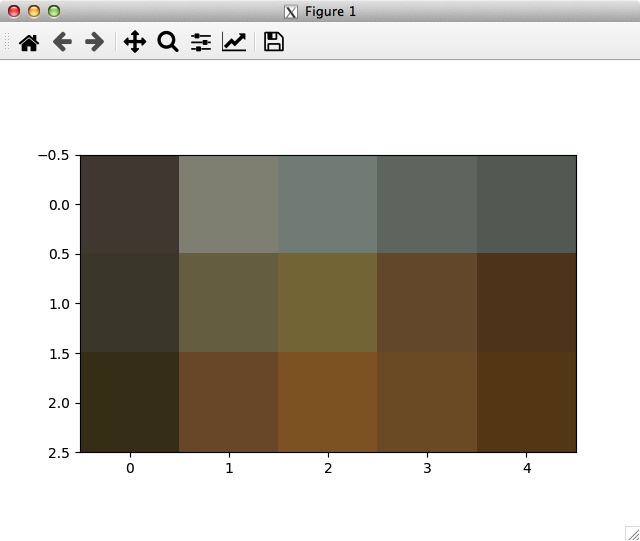

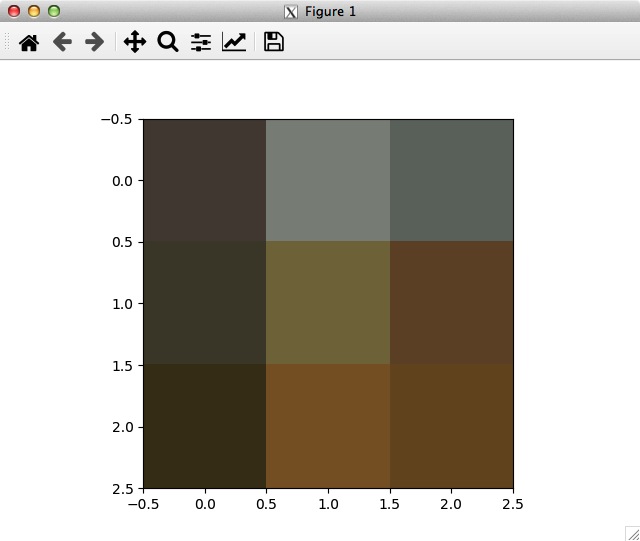

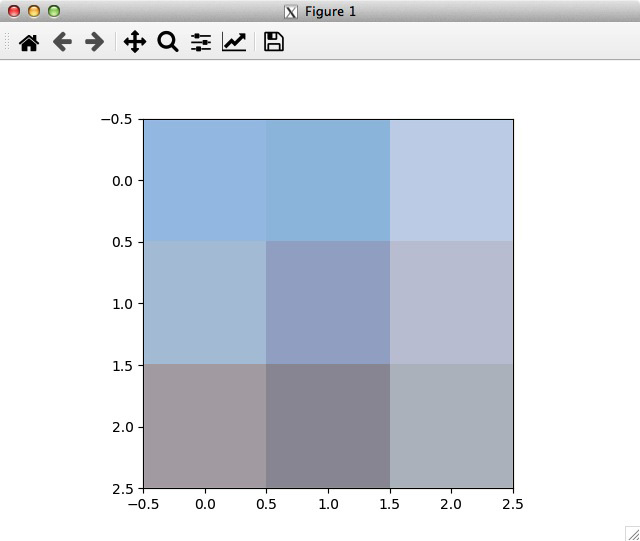

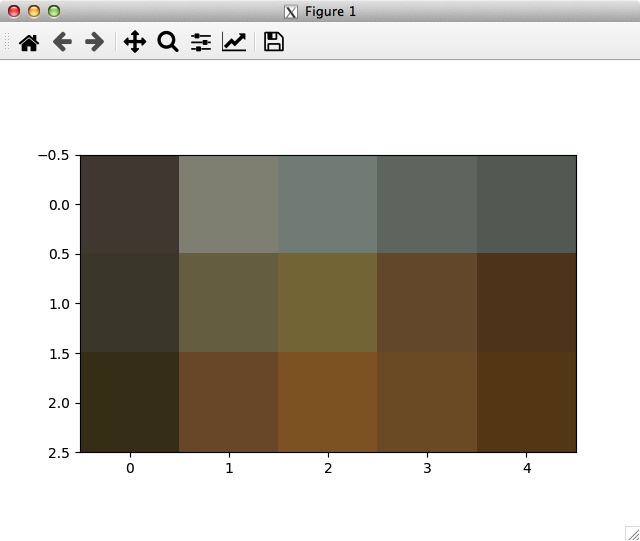

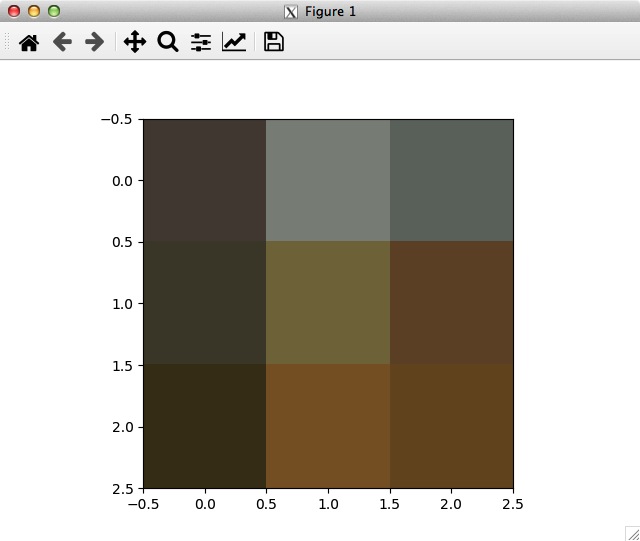

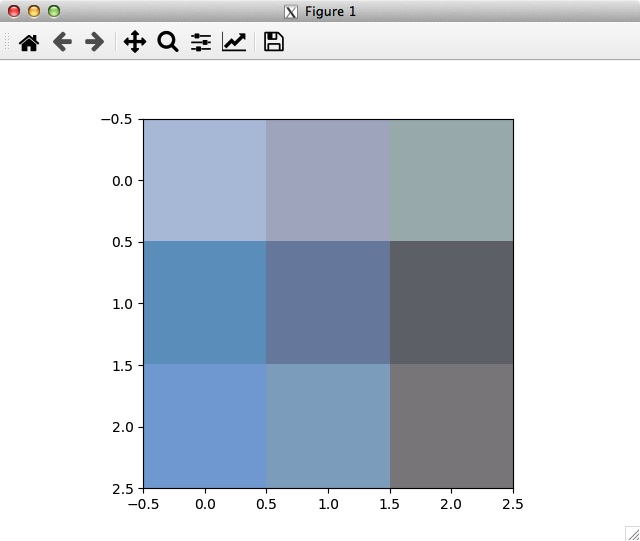

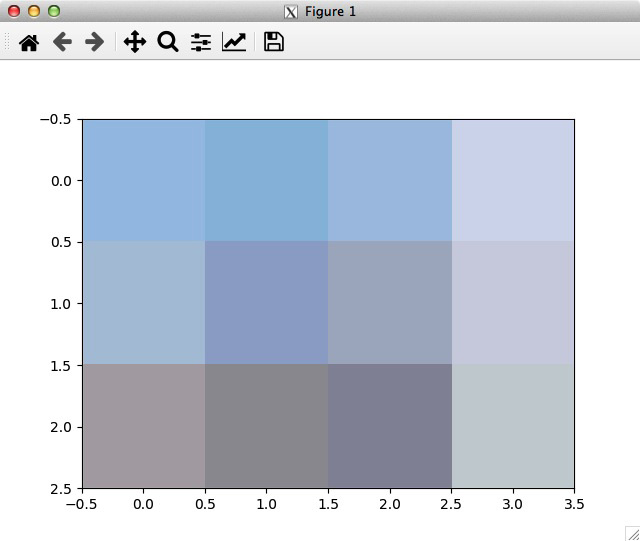

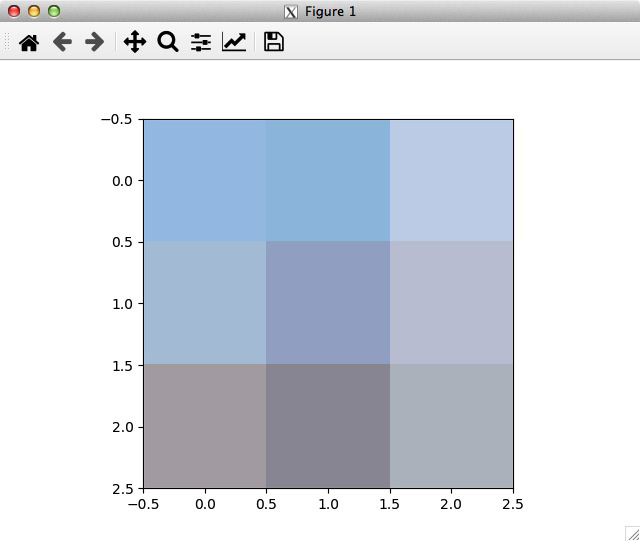

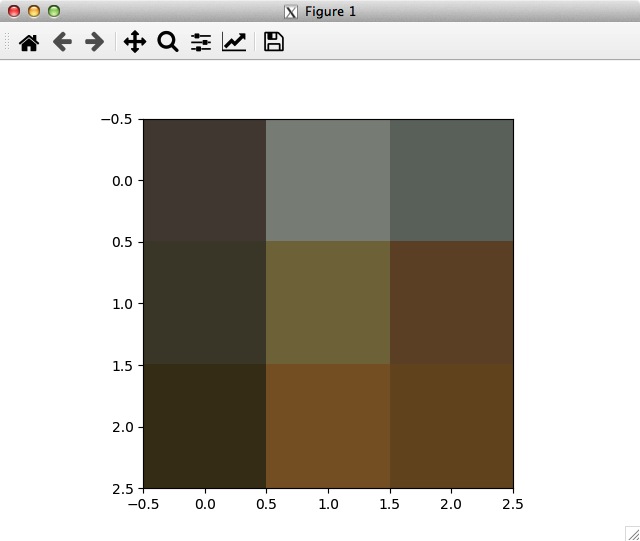

- Check the conversion performed by load_and_preprocess_image:

>>> import matplotlib.pyplot as plt

>>> for path in all_image_paths:

...     image_tensor = raw_to_tensor(path)

...     plt.imshow(image_tensor)

...     plt.show()

...     image_final = load_and_preprocess_image(path)

...     plt.imshow(image_final)

...     plt.show()

...

<matplotlib.image.AxesImage object at 0x526b1470>

<matplotlib.image.AxesImage object at 0x524b50d0>

<matplotlib.image.AxesImage object at 0x524eed70>

<matplotlib.image.AxesImage object at 0x524a7f90>

<matplotlib.image.AxesImage object at 0x5246eb30>

<matplotlib.image.AxesImage object at 0x51f14cd0>

4. path_ds, label_ds

>>> path_ds = \

... tf.data.Dataset.from_tensor_slices(all_image_paths)

>>> path_ds

<DatasetV1Adapter shapes: (), types: tf.string>

>>> for i in path_ds:

...     print(i)

...

tf.Tensor(b'(path)/category0/image0.jpg', shape=(), dtype=string)

tf.Tensor(b'(path)/category1/image1.jpg', shape=(), dtype=string)

tf.Tensor(b'(path)/category1/image2.jpg', shape=(), dtype=string)

>>> label_ds = \

... tf.data.Dataset.from_tensor_slices(all_image_labels)

>>> label_ds

<DatasetV1Adapter shapes: (), types: tf.string>

>>> for i in label_ds:

...     print(i)

...

tf.Tensor(b'0', shape=(), dtype=string)

tf.Tensor(b'1', shape=(), dtype=string)

tf.Tensor(b'1', shape=(), dtype=string)

5. image_ds

>>> def load_and_preprocess_image(path):

...     image_raw = tf.io.read_file(path)

...     image_tensor = tf.image.decode_image(image_raw, channels=3, expand_animations=False)

...     image_final = tf.image.resize(image_tensor, [3, 3])

...     image_final /= 255.0

...     return image_final

...

>>> AUTOTUNE = tf.data.experimental.AUTOTUNE

>>> image_ds = \

... path_ds.map(load_and_preprocess_image, num_parallel_calls=AUTOTUNE)

After this, the following WARNING message is printed twice:

WARNING:tensorflow:AutoGraph could not transform

<function load_and_preprocess_image at 0x620a6a98> and will run it as-is.

Please report this to the TensorFlow team.

When filing the bug, set the verbosity to 10 (on Linux,

`export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: Unable to locate the source code of

<function load_and_preprocess_image at 0x620a6a98>.

Note that functions defined in certain environments,

like the interactive Python shell do not expose their source code.

If that is the case, you should to define them in a .py source file.

If you are certain the code is graph-compatible,

wrap the call using @tf.autograph.do_not_convert.

Original error: could not get source code

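Also, if ", expand_animations=False" is omitted from

tf.image.decode_image(image_raw, channels=3, expand_animations=False)

in the definition of load_and_preprocess_image, the following error is raised. By default, decode_image returns a 4-D tensor for GIF files and a 3-D tensor otherwise, so when map traces the function as a graph, the rank of the decoded image is unknown, and tf.image.resize rejects an input whose shape is unknown. With expand_animations=False, decode_image always returns a 3-D tensor (an animated GIF is truncated to its first frame). The version in step 3 did not need this because, in eager execution, each decoded image has a concrete shape.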

Traceback (most recent call last):

File "<stdin>", line 2, in <module>

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/data/ops/dataset_ops.py",

line 1993, in map self, map_func, num_parallel_calls, preserve_cardinality=False))

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/data/ops/dataset_ops.py",

line 3582, in __init__ use_legacy_function=use_legacy_function)

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/data/ops/dataset_ops.py",

line 2819, in __init__ self._function = wrapper_fn._get_concrete_function_internal()

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py",

line 2326, in _get_concrete_function_internal *args, **kwargs)

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py",

line 2320, in _get_concrete_function_internal_garbage_collected graph_function, _, _ =

self._maybe_define_function(args, kwargs)

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py",

line 2628, in _maybe_define_function graph_function = self._create_graph_function(args, kwargs)

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/eager/function.py",

line 2517, in _create_graph_function capture_by_value=self._capture_by_value),

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/framework/func_graph.py",

line 943, in func_graph_from_py_func func_outputs = python_func(*func_args, **func_kwargs)

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/data/ops/dataset_ops.py",

line 2812, in wrapper_fn ret = _wrapper_helper(*args)

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/data/ops/dataset_ops.py",

line 2754, in _wrapper_helper ret = autograph.tf_convert(func, ag_ctx)(*nested_args)

File "/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/autograph/impl/api.py",

line 237, in wrapper raise e.ag_error_metadata.to_exception(e)

ValueError: in converted code:

<stdin>:3 load_and_preprocess_image

/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/ops/image_ops_impl.py:

1226 resize_images skip_resize_if_same=True)

/home/pi/venv/lib/python3.7/site-packages/tensorflow_core/python/ops/image_ops_impl.py:

1073 _resize_images_common raise ValueError('\'images\' contains no shape.')

ValueError: 'images' contains no shape.

- Check that image_ds has been built:

>>> for i in image_ds:

...     print(i)

...

tf.Tensor(

[[[0.3098039 0.28235292 0.25098038]

[0.5424836 0.5555555 0.52941173]

[0.4339869 0.45359474 0.43006533]]

[[0.29019606 0.27843136 0.2117647 ]

[0.49281043 0.4575163 0.2980392 ]

[0.40653595 0.32418302 0.20261437]]

[[0.2588235 0.23137254 0.12156862]

[0.496732 0.38692808 0.20392156]

[0.42614383 0.33594772 0.17124183]]], shape=(3, 3, 3), dtype=float32)

tf.Tensor(

[[[0.7333333 0.772549 0.8705882 ]

[0.69019604 0.7098039 0.7843137 ]

[0.6823529 0.7215686 0.7294117 ]]

[[0.4954248 0.620915 0.77385616]

[0.4954248 0.5464052 0.669281 ]

[0.44183004 0.448366 0.47843134]]

[[0.5581699 0.66405225 0.8405228 ]

[0.5973856 0.67581695 0.7816993 ]

[0.5437909 0.53594774 0.54771245]]], shape=(3, 3, 3), dtype=float32)

tf.Tensor(

[[[0.4705882 0.36470586 0.18039215]

[0.496732 0.38039213 0.21437907]

[0.3215686 0.2732026 0.14640522]]

[[0.41568625 0.3490196 0.23137254]

[0.4980392 0.45620912 0.3215686 ]

[0.34640518 0.3307189 0.2457516 ]]

[[0.45490193 0.47450978 0.45098037]

[0.55032676 0.55163395 0.51241827]

[0.40130717 0.38169932 0.34248364]]], shape=(3, 3, 3), dtype=float32)

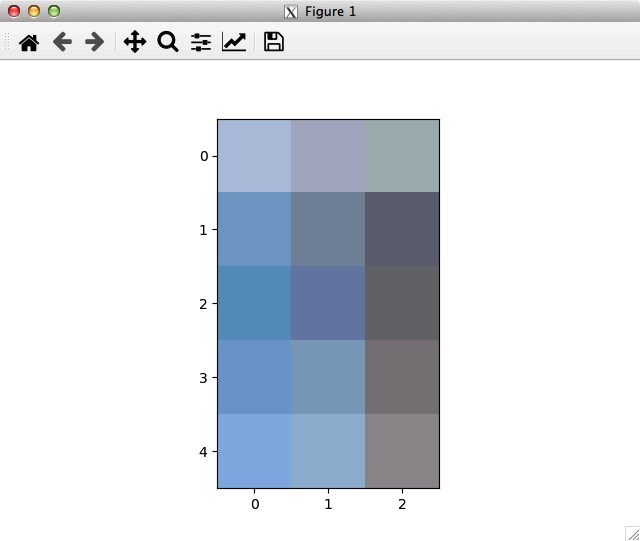

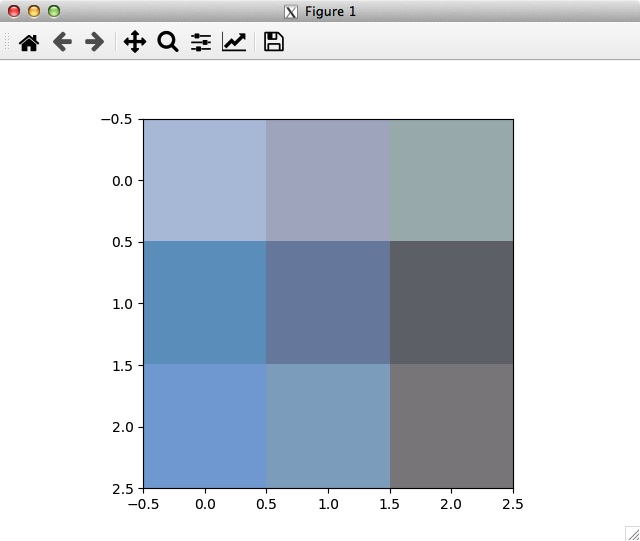

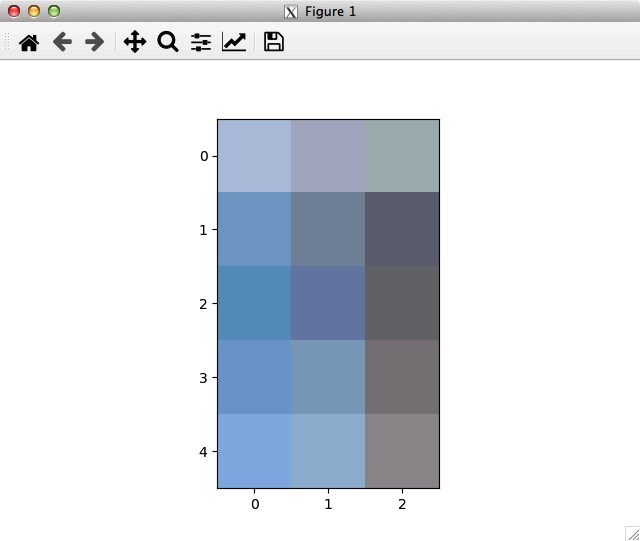

- Display the images in image_ds:

>>> import matplotlib.pyplot as plt

>>> for image in image_ds:

...     plt.imshow(image)

...     plt.show()

...

<matplotlib.image.AxesImage object at 0x5b765210>

<matplotlib.image.AxesImage object at 0x50d748b0>

<matplotlib.image.AxesImage object at 0x693255b0>

6. image_label_ds

>>> image_label_ds \

... = tf.data.Dataset.zip((image_ds, label_ds))

>>> image_label_ds

<DatasetV1Adapter shapes: ((3, 3, 3), ()), types: (tf.float32, tf.string)>

>>> for i in image_label_ds:

...     print(i)

...

(<tf.Tensor: shape=(3, 3, 3), dtype=float32, numpy=

array([[[0.3098039 , 0.28235292, 0.25098038],

[0.5424836 , 0.5555555 , 0.52941173],

[0.4339869 , 0.45359474, 0.43006533]],

[[0.29019606, 0.27843136, 0.2117647 ],

[0.49281043, 0.4575163 , 0.2980392 ],

[0.40653595, 0.32418302, 0.20261437]],

[[0.2588235 , 0.23137254, 0.12156862],

[0.496732 , 0.38692808, 0.20392156],

[0.42614383, 0.33594772, 0.17124183]]], dtype=float32)>,

<tf.Tensor: shape=(), dtype=string, numpy=b'0'>)

(<tf.Tensor: shape=(3, 3, 3), dtype=float32, numpy=

array([[[0.7333333 , 0.772549 , 0.8705882 ],

[0.69019604, 0.7098039 , 0.7843137 ],

[0.6823529 , 0.7215686 , 0.7294117 ]],

[[0.4954248 , 0.620915 , 0.77385616],

[0.4954248 , 0.5464052 , 0.669281 ],

[0.44183004, 0.448366 , 0.47843134]],

[[0.5581699 , 0.66405225, 0.8405228 ],

[0.5973856 , 0.67581695, 0.7816993 ],

[0.5437909 , 0.53594774, 0.54771245]]], dtype=float32)>,

<tf.Tensor: shape=(), dtype=string, numpy=b'1'>)

(<tf.Tensor: shape=(3, 3, 3), dtype=float32, numpy=

array([[[0.4705882 , 0.36470586, 0.18039215],

[0.496732 , 0.38039213, 0.21437907],

[0.3215686 , 0.2732026 , 0.14640522]],

[[0.41568625, 0.3490196 , 0.23137254],

[0.4980392 , 0.45620912, 0.3215686 ],

[0.34640518, 0.3307189 , 0.2457516 ]],

[[0.45490193, 0.47450978, 0.45098037],

[0.55032676, 0.55163395, 0.51241827],

[0.40130717, 0.38169932, 0.34248364]]], dtype=float32)>,

<tf.Tensor: shape=(), dtype=string, numpy=b'1'>)
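Dataset.zip pairs the i-th element of image_ds with the i-th element of label_ds, much like Python's built-in zip, so path_ds and label_ds must be in the same order (here both come from the same CSV rows). Like Python's zip, it stops at the shorter input, so a length mismatch would silently drop samples. The plain-Python sketch below models the datasets as lists (it is not tf.data code) and adds a hypothetical guard, zip_checked.

```python
def zip_checked(images, labels):
    """Hypothetical helper: pair images with labels, refusing length mismatches."""
    if len(images) != len(labels):
        raise ValueError(f"{len(images)} images but {len(labels)} labels")
    return list(zip(images, labels))


paths = ["image0.jpg", "image1.jpg", "image2.jpg"]
labels = [0, 1, 1]
print(zip_checked(paths, labels))
# The built-in zip silently truncates to the shorter input:
print(list(zip(paths, labels[:2])))  # [('image0.jpg', 0), ('image1.jpg', 1)]
```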

7. shuffle

>>> image_count = len(all_image_paths)

>>> ds = image_label_ds.shuffle(buffer_size=image_count)

>>> ds

<DatasetV1Adapter shapes: ((3, 3, ?), ()), types: (tf.float32, tf.string)>

>>> for i in ds:

...     print(i)

...

(<tf.Tensor: shape=(3, 3, 3), dtype=float32, numpy=

array([[[0.4705882 , 0.36470586, 0.18039215],

[0.496732 , 0.38039213, 0.21437907],

[0.3215686 , 0.2732026 , 0.14640522]],

[[0.41568625, 0.3490196 , 0.23137254],

[0.4980392 , 0.45620912, 0.3215686 ],

[0.34640518, 0.3307189 , 0.2457516 ]],

[[0.45490193, 0.47450978, 0.45098037],

[0.55032676, 0.55163395, 0.51241827],

[0.40130717, 0.38169932, 0.34248364]]], dtype=float32)>,

<tf.Tensor: shape=(), dtype=string, numpy=b'1'>)

(<tf.Tensor: shape=(3, 3, 3), dtype=float32, numpy=

array([[[0.3098039 , 0.28235292, 0.25098038],

[0.5424836 , 0.5555555 , 0.52941173],

[0.4339869 , 0.45359474, 0.43006533]],

[[0.29019606, 0.27843136, 0.2117647 ],

[0.49281043, 0.4575163 , 0.2980392 ],

[0.40653595, 0.32418302, 0.20261437]],

[[0.2588235 , 0.23137254, 0.12156862],

[0.496732 , 0.38692808, 0.20392156],

[0.42614383, 0.33594772, 0.17124183]]], dtype=float32)>,

<tf.Tensor: shape=(), dtype=string, numpy=b'0'>)

(<tf.Tensor: shape=(3, 3, 3), dtype=float32, numpy=

array([[[0.7333333 , 0.772549 , 0.8705882 ],

[0.69019604, 0.7098039 , 0.7843137 ],

[0.6823529 , 0.7215686 , 0.7294117 ]],

[[0.4954248 , 0.620915 , 0.77385616],

[0.4954248 , 0.5464052 , 0.669281 ],

[0.44183004, 0.448366 , 0.47843134]],

[[0.5581699 , 0.66405225, 0.8405228 ],

[0.5973856 , 0.67581695, 0.7816993 ],

[0.5437909 , 0.53594774, 0.54771245]]], dtype=float32)>,

<tf.Tensor: shape=(), dtype=string, numpy=b'1'>)
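shuffle(buffer_size=...) works through a buffer: it fills a buffer with buffer_size elements, then repeatedly emits a randomly chosen element from the buffer and replaces it with the next input element. Setting buffer_size to image_count, as above, therefore shuffles the whole dataset uniformly, while a smaller buffer only mixes nearby elements; by default the order also changes on each pass over the dataset. The plain-Python generator below sketches this buffering idea; it is not TensorFlow's implementation, and buffered_shuffle and its seed parameter are hypothetical.

```python
import random


def buffered_shuffle(iterable, buffer_size, seed=None):
    """Sketch of buffer-based shuffling (hypothetical helper, not tf.data)."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be at least 1")
    rng = random.Random(seed)
    buffer = []
    for item in iterable:
        if len(buffer) < buffer_size:
            buffer.append(item)  # fill the buffer first
            continue
        # Buffer full: emit a random element and put the new item in its slot.
        i = rng.randrange(len(buffer))
        yield buffer[i]
        buffer[i] = item
    # Input exhausted: drain what is left in the buffer in random order.
    rng.shuffle(buffer)
    yield from buffer


# buffer_size=1 never mixes anything; a full-size buffer shuffles everything.
print(list(buffered_shuffle(range(5), buffer_size=1)))  # [0, 1, 2, 3, 4]
print(list(buffered_shuffle(range(5), buffer_size=5)))
```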

8. batch

When training images are fed to the model (NN), they are fed BATCH_SIZE images at a time.

>>> BATCH_SIZE = 2

>>> ds = ds.batch(BATCH_SIZE)

>>> ds

<DatasetV1Adapter shapes: ((?, 3, 3, ?), (?,)), types: (tf.float32, tf.string)>

>>> for i in ds:

...     print(i)

...

(<tf.Tensor: shape=(2, 3, 3, 3), dtype=float32, numpy=

array([[[[0.4705882 , 0.36470586, 0.18039215],

[0.496732 , 0.38039213, 0.21437907],

[0.3215686 , 0.2732026 , 0.14640522]],

[[0.41568625, 0.3490196 , 0.23137254],

[0.4980392 , 0.45620912, 0.3215686 ],

[0.34640518, 0.3307189 , 0.2457516 ]],

[[0.45490193, 0.47450978, 0.45098037],

[0.55032676, 0.55163395, 0.51241827],

[0.40130717, 0.38169932, 0.34248364]]],

[[[0.3098039 , 0.28235292, 0.25098038],

[0.5424836 , 0.5555555 , 0.52941173],

[0.4339869 , 0.45359474, 0.43006533]],

[[0.29019606, 0.27843136, 0.2117647 ],

[0.49281043, 0.4575163 , 0.2980392 ],

[0.40653595, 0.32418302, 0.20261437]],

[[0.2588235 , 0.23137254, 0.12156862],

[0.496732 , 0.38692808, 0.20392156],

[0.42614383, 0.33594772, 0.17124183]]]], dtype=float32)>,

<tf.Tensor: shape=(2,), dtype=string, numpy=array([b'1', b'0'], dtype=object)>)

(<tf.Tensor: shape=(1, 3, 3, 3), dtype=float32, numpy=

array([[[[0.7333333 , 0.772549 , 0.8705882 ],

[0.69019604, 0.7098039 , 0.7843137 ],

[0.6823529 , 0.7215686 , 0.7294117 ]],

[[0.4954248 , 0.620915 , 0.77385616],

[0.4954248 , 0.5464052 , 0.669281 ],

[0.44183004, 0.448366 , 0.47843134]],

[[0.5581699 , 0.66405225, 0.8405228 ],

[0.5973856 , 0.67581695, 0.7816993 ],

[0.5437909 , 0.53594774, 0.54771245]]]], dtype=float32)>,

<tf.Tensor: shape=(1,), dtype=string, numpy=array([b'1'], dtype=object)>)
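With 3 images and BATCH_SIZE = 2, the dataset yields one batch of 2 and a final partial batch of 1, which is why the leading dimension is shown as ? in the dataset's shapes. If every batch must have the same size, Dataset.batch accepts drop_remainder=True, which discards the final partial batch. The plain-Python sketch below mirrors both behaviors on a list of labels; batched is a hypothetical helper, not a TensorFlow function.

```python
def batched(items, batch_size, drop_remainder=False):
    """Hypothetical helper: split a list into consecutive batches of batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batches = [items[i:i + batch_size] for i in range(0, len(items), batch_size)]
    if drop_remainder and batches and len(batches[-1]) < batch_size:
        batches.pop()  # discard the final, smaller batch
    return batches


labels = ["1", "0", "1"]  # labels in the shuffled order shown above
print(batched(labels, 2))                       # [['1', '0'], ['1']]
print(batched(labels, 2, drop_remainder=True))  # [['1', '0']]
```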

9. buffer

>>> ds = ds.prefetch(buffer_size=image_count)
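prefetch(buffer_size=...) lets the input pipeline prepare upcoming elements in the background while the current one is consumed (for example, while the model trains on the current batch), keeping at most buffer_size elements ready. Because ds has already been batched, the buffer here counts batches, not images; tf.data.experimental.AUTOTUNE, defined in step 5, can also be passed as buffer_size to let TensorFlow choose. The sketch below models the idea in plain Python with a producer thread and a bounded queue.Queue; the name prefetch and this design are illustrative assumptions, not how tf.data implements it. An exception in the producer simply ends the stream early here.

```python
import queue
import threading


def prefetch(iterable, buffer_size):
    """Illustrative prefetcher: a producer thread fills a bounded queue."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be at least 1")
    q = queue.Queue(maxsize=buffer_size)  # at most buffer_size items waiting
    end = object()  # sentinel marking the end of the input

    def producer():
        try:
            for item in iterable:
                q.put(item)  # blocks while the buffer is full
        finally:
            q.put(end)  # always signal the consumer, even on error

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = q.get()
        if item is end:
            return
        yield item


print(list(prefetch(range(5), buffer_size=2)))  # [0, 1, 2, 3, 4]
```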

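Note: the following shuffle calls raise an error. shuffle is an instance method, and calling it through the class tf.data.Dataset leaves self unbound. Call it on the dataset instead, as in step 7 (image_label_ds.shuffle(buffer_size=image_count)), or give apply a function that takes and returns a dataset, e.g. image_label_ds.apply(lambda d: d.shuffle(buffer_size=image_count)).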

>>> ds = image_label_ds.apply(

... tf.data.Dataset.shuffle(buffer_size= image_count) )

Traceback (most recent call last):

File "<stdin>", line 2, in <module>

TypeError: shuffle() missing 1 required positional argument: 'self'

>>> tf.data.Dataset.shuffle(buffer_size= image_count)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

TypeError: shuffle() missing 1 required positional argument: 'self'
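The same TypeError can be reproduced without TensorFlow. The toy class below is hypothetical (it is not tf.data): its apply mimics the contract of Dataset.apply by calling a function that maps a dataset to a dataset, and its shuffle is simplified to ignore buffer_size.

```python
import random


class ToyDataset:
    """Hypothetical stand-in for a dataset, to illustrate method binding."""

    def __init__(self, items):
        self.items = list(items)

    def shuffle(self, buffer_size):
        # Simplified: ignores buffer_size and shuffles all items at once.
        return ToyDataset(random.sample(self.items, len(self.items)))

    def apply(self, transformation_func):
        # Like Dataset.apply: pass this dataset to a function returning a dataset.
        return transformation_func(self)


ds = ToyDataset([0, 1, 2])
try:
    ToyDataset.shuffle(buffer_size=3)  # called on the class: nothing binds self
except TypeError as e:
    print(e)  # ...missing 1 required positional argument: 'self'

# Works: the lambda receives the dataset and calls shuffle on that instance.
shuffled = ds.apply(lambda d: d.shuffle(buffer_size=3))
print(sorted(shuffled.items))  # [0, 1, 2]
```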
